Why are some pickled NLP models so large?!

Problem.

For some NLP models, the pickled trained model is very large on disk even though it uses only a limited number of features, and loading it consumes a lot of memory at inference time.

Solution.

Before jumping to the solution, let’s look at an example: Detecting Clickbaits (3/4) - Logistic Regression.

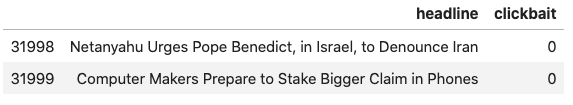

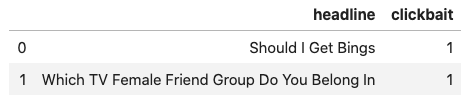

There, we use a set of 32000 headlines and their labels, indicating whether each headline is clickbait (label 1) or not (label 0), to build a model that detects clickbait headlines. Let’s remind ourselves of the steps:

Read data:

    import pandas as pd

    df = pd.read_csv("https://raw.github.com/hminooei/DSbyHadi/master/data/clickbait_data.csv.zip")
    df.tail(2)
    df.head(2)

Split into train/validation/test sets:

    from sklearn.model_selection import train_test_split

    # Hold out 25% of the data as the test set, stratified by label.
    text_train_val, text_test, label_train_val, label_test = train_test_split(
        df["headline"],
        df["clickbait"],
        test_size=0.25,
        stratify=df["clickbait"],
        random_state=9)

    # Split the train_val dataset into separate train and validation portions.
    text_train, text_val, label_train, label_val = train_test_split(
        text_train_val,
        label_train_val,
        test_size=0.2,
        random_state=9)

Define a function that builds a pipeline consisting of CountVectorizer, TfidfTransformer (note that you can combine these two and use TfidfVectorizer), and LogisticRegression stages, so that you can pass different parameters to it for tuning:

    from sklearn.feature_extraction.text import CountVectorizer, TfidfTransformer
    from sklearn.linear_model import LogisticRegression
    from sklearn.pipeline import Pipeline

    def train_measure_model(text_train, label_train, text_val, label_val,
                            cv_binary, cv_analyzer, cv_ngram, cv_max_features,
                            cv_have_tfidf, cv_use_idf, cfr_penalty, cfr_C, stop_words=None,
                            text_column_name="headline"):
        # cv_ngram is a tuple like ("w", 1, 3); elements 1 and 2 give the n-gram range.
        cv = CountVectorizer(binary=cv_binary, stop_words=stop_words,
                             analyzer=cv_analyzer,
                             ngram_range=cv_ngram[1:3],
                             max_features=cv_max_features)
        if cv_have_tfidf:
            pipeline = Pipeline(steps=[("vectorizer", cv),
                                       ("tfidf", TfidfTransformer(use_idf=cv_use_idf)),
                                       ("classifier", LogisticRegression(penalty=cfr_penalty,
                                                                         C=cfr_C,
                                                                         random_state=9,
                                                                         max_iter=100,
                                                                         n_jobs=None))])
        else:
            pipeline = Pipeline(steps=[("vectorizer", cv),
                                       ("classifier", LogisticRegression(penalty=cfr_penalty,
                                                                         C=cfr_C,
                                                                         random_state=9,
                                                                         max_iter=100,
                                                                         n_jobs=None))])
        pipeline.fit(text_train, label_train)
        return pipeline

Finally, train a pipeline model with the following parameters:

    cfr_pipeline = train_measure_model(text_train, label_train, text_val, label_val,
                                       cv_binary=True, cv_analyzer="word", cv_ngram=("w", 1, 3),
                                       cv_max_features=5000, cv_have_tfidf=True, cv_use_idf=True,
                                       cfr_penalty="l2", cfr_C=1.0, stop_words=None)

If we just pickle cfr_pipeline,

    import pickle

    model_name = 'clickbait-model.pkl'
    # Use a context manager so the file is closed after writing.
    with open(model_name, 'wb') as f:
        pickle.dump(cfr_pipeline, f, protocol=2)

its size on disk is 5.9 MB, although we are only using 5000 features (word n-grams of length 1 to 3).
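One way to see where the bytes go is to pickle each attribute of a fitted object separately and compare the sizes. The sketch below uses only the standard library; `pickled_size_by_attribute` and `ToyModel` are hypothetical names introduced for illustration and are not part of scikit-learn. With the pipeline above, you would presumably pass a single step, such as `cfr_pipeline.named_steps["vectorizer"]`, instead of the toy object.

```python
import pickle


def pickled_size_by_attribute(obj):
    """Return {attribute_name: pickled size in bytes}, largest first."""
    sizes = {
        name: len(pickle.dumps(value, protocol=2))
        for name, value in vars(obj).items()
    }
    return dict(sorted(sizes.items(), key=lambda item: item[1], reverse=True))


class ToyModel:
    """Hypothetical stand-in for a fitted estimator (not a scikit-learn class)."""

    def __init__(self):
        # A small mapping that the model needs at inference time.
        self.kept = {"term%d" % i: i for i in range(10)}
        # A much larger collection that is stored but never used for prediction.
        self.leftovers = {"unused%d" % i for i in range(10000)}


sizes = pickled_size_by_attribute(ToyModel())
for name, n_bytes in sizes.items():
    print(name, n_bytes)
```

The attribute listed first is the one that dominates the pickle, which is usually the quickest hint about what can be dropped before saving.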

The reason is the stop_words_ attribute of CountVectorizer. Its documentation says:

    stop_words_ : set
        Terms that were ignored because they either:

          - occurred in too many documents (max_df)
          - occurred in too few documents (min_df)
          - were cut off by feature selection (max_features).

        This is only available if no vocabulary was given.

and

    Notes
    -----
    The stop_words_ attribute can get large and increase the model size
    when pickling. This attribute is provided only for introspection and can
    be safely removed using delattr or set to None before pickling.
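To get a feel for why the set of ignored terms grows so quickly, here is a toy sketch in plain Python. It is a simplified stand-in for the counting idea, not CountVectorizer's actual implementation: the `word_ngrams` helper, the made-up headlines, and the cap of 5 features are all assumptions chosen for illustration.

```python
from collections import Counter


def word_ngrams(text, min_n=1, max_n=3):
    """Yield all word n-grams of length min_n..max_n from a lowercased text."""
    tokens = text.lower().split()
    for n in range(min_n, max_n + 1):
        for start in range(len(tokens) - n + 1):
            yield " ".join(tokens[start:start + n])


# Made-up headlines, used only to illustrate the counting.
headlines = [
    "you will not believe what happened next",
    "ten things you did not know about cats",
    "stock markets fall as rates rise",
    "what happened to the markets this week",
]

counts = Counter(ngram for h in headlines for ngram in word_ngrams(h))

max_features = 5  # hypothetical cap, analogous to max_features=5000 above
kept = {term for term, _ in counts.most_common(max_features)}
discarded = set(counts) - kept  # plays the role of stop_words_

print(len(kept), "kept;", len(discarded), "discarded")
```

Even with four short headlines, far more n-grams are discarded than kept. The kept set is capped by `max_features`, while the discarded set keeps growing with the amount and length of the training text.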

So, to resolve the size issue, we can set stop_words_ to None before pickling:

    import pickle

    model_name = 'clickbait-model-sm.pkl'
    # Drop the introspection-only attribute before pickling.
    cfr_pipeline.named_steps.vectorizer.stop_words_ = None
    with open(model_name, 'wb') as f:
        pickle.dump(cfr_pipeline, f, protocol=2)

And now the size of the pickled model is 506 KB. Voila!
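If a pipeline has several vectorizer steps, or you do not want to hard-code the step name, the same idea can be wrapped in a small helper. The following is a minimal sketch: `strip_stop_words` is a hypothetical helper, not a scikit-learn function, and it assumes only that pipeline-like objects expose a `steps` list of `(name, step)` pairs. Toy stand-in objects are used so the snippet runs without scikit-learn installed.

```python
import pickle
from types import SimpleNamespace


def strip_stop_words(estimator):
    """Set stop_words_ to None on an estimator and, if it has `steps`, on each step.

    Returns the number of attributes that were cleared.
    """
    cleared = 0
    if getattr(estimator, "stop_words_", None) is not None:
        estimator.stop_words_ = None
        cleared += 1
    # Pipeline-like objects expose a list of (name, step) pairs as `steps`.
    for _name, step in getattr(estimator, "steps", []):
        cleared += strip_stop_words(step)
    return cleared


# Toy stand-ins (assumptions for illustration, not real fitted estimators).
toy_vectorizer = SimpleNamespace(
    vocabulary_={"cats": 0, "markets": 1},
    stop_words_={"ngram %d" % i for i in range(5000)},
)
toy_classifier = SimpleNamespace(coef_=[0.5, -0.25])
toy_pipeline = SimpleNamespace(
    steps=[("vectorizer", toy_vectorizer), ("classifier", toy_classifier)]
)

size_before = len(pickle.dumps(toy_pipeline, protocol=2))
n_cleared = strip_stop_words(toy_pipeline)
size_after = len(pickle.dumps(toy_pipeline, protocol=2))
print(n_cleared, size_before, size_after)
```

With the real model, the call would presumably be `strip_stop_words(cfr_pipeline)` right before `pickle.dump`. Only the introspection attribute is touched; the vocabulary and classifier weights are left as they are.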

Note that in this case the initial pickled model was not that large (only 5.9 MB), because our training texts are short (headlines are only a few words long) and hence the set of all 1-3 ngrams is not too large.

However, with larger text bodies in the training set (reviews, tweets, news, etc.), the pickled model can easily exceed 1-2 GB even if the number of features is only 1000. Such a model takes too long to load and occupies multiple gigabytes of RAM at inference time. With the above solution, its size shrinks to less than 100 KB!